Embracing Innovation in CI/CD Pipelines: A Shift From Traditional Practices

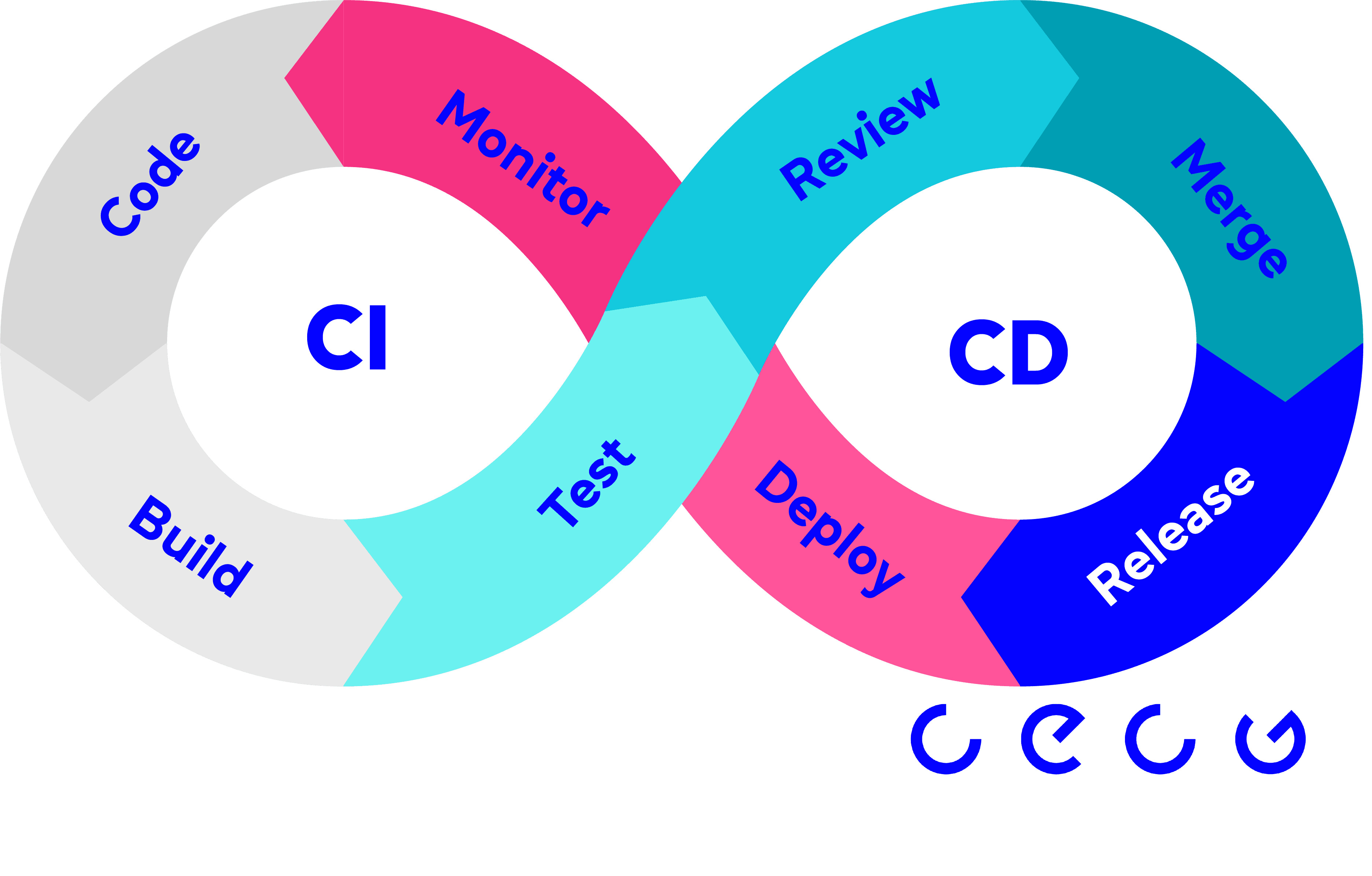

The software development industry has long relied on established practices and tools for Continuous Integration and Continuous Deployment (CI/CD). However, ongoing discussion and new tooling suggest that these traditional methods deserve a second look. This article explores emerging trends in CI/CD pipelines and argues for more dynamic, adaptable approaches that challenge conventional processes.

Rethinking CI/CD Pipelines

CI/CD pipelines, traditionally automated sequences triggered remotely by code commits, often overlook the significant amount of development work happening locally. This can cause delays in critical feedback, with developers sometimes waiting for remote systems to flag issues that could have been identified earlier during local development.

Small errors or oversights in code, common in the development process, can lead to pipeline failures and consume resources. Implementing local pipeline execution capabilities addresses this challenge head-on. It empowers both developers and DevOps support engineers to test and rectify issues more efficiently, improving the overall development cycle and reducing costs.

Main Propositions for CI/CD

CI Pipelines Shouldn't Be Remote-Only: Adapting pipelines to function both locally and remotely provides more immediate feedback and enhances development agility.

Simplifying Orchestration: Streamlining the orchestration of tasks in pipelines to avoid unnecessary complexity.

Beyond Declarative Formats: Encouraging a move away from solely relying on declarative formats like YAML, which may not be sufficient for defining complex operational tasks.

Tool Independence: Fostering less reliance on specific tools within pipelines for greater flexibility and adaptability.

Proposing a Script-Based Approach

The integration of script-based approaches in CI/CD pipelines involves transforming various pipeline tasks into executable scripts, such as build.sh for building, unit-test.sh for unit testing, and deploy.sh for deployment. This method presents a practical solution, as it enables the execution of these tasks in both local and remote environments. The key benefits include providing immediate feedback to the development team and reducing redundancy in task execution.

Such a script-based approach ensures that tasks are standardised, making them more predictable and easier to manage. This can be particularly beneficial in complex projects where consistency in execution across different environments is crucial.
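
As a minimal sketch of this idea, the Python wrapper below maps task names to the scripts mentioned above and exposes one entry point, so a developer's machine and a CI job can invoke the same command. The `scripts/` directory, the `TASKS` table, and the check on a `CI` environment variable (which many hosted CI services set) are assumptions made for illustration, not part of any particular tool.

```python
import os
import subprocess
import sys
from pathlib import Path

# Hypothetical layout: the scripts named in the article live in ./scripts.
SCRIPTS_DIR = Path("scripts")
TASKS = {
    "build": "build.sh",
    "unit-test": "unit-test.sh",
    "deploy": "deploy.sh",
}


def running_in_ci(env=None):
    """Return True when the CI variable looks truthy (an assumed convention)."""
    env = os.environ if env is None else env
    return env.get("CI", "").lower() in {"1", "true", "yes"}


def command_for(task):
    """Translate a task name into the argv used to run its script."""
    if task not in TASKS:
        raise KeyError(f"unknown task: {task}")
    return ["sh", str(SCRIPTS_DIR / TASKS[task])]


def run(task):
    """Run one task and return its exit code; the call is the same everywhere."""
    where = "CI" if running_in_ci() else "local"
    print(f"[{where}] running {task}", file=sys.stderr)
    return subprocess.run(command_for(task)).returncode
```

A developer could call `run("unit-test")` before pushing, and the remote pipeline could call exactly the same function, which keeps the two environments from drifting apart.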

Challenges with a Script-Only Approach

Despite its benefits, a script-based approach to CI/CD pipelines brings challenges of its own. One of the primary concerns is orchestrating these scripts efficiently in both local and remote environments. In a local setting, developers need to execute the scripts in a way that replicates the remote environment as closely as possible. This requires a deep understanding of the pipeline's workings and the ability to simulate the conditions and dependencies that exist on the remote server.

In remote settings, challenges include ensuring that scripts are compatible with the server environment and that they can be integrated seamlessly with other parts of the CI/CD pipeline.

Another concern is avoiding over-complication. While scripts offer flexibility, there's a risk of creating convoluted patterns that are difficult to understand and maintain. It's crucial to strike a balance between the versatility that scripting offers and maintaining clarity and simplicity in the pipeline's operations.

Moreover, teams need to consider error handling and logging within these scripts. Effective error handling ensures that scripts can gracefully recover or provide meaningful feedback when something goes wrong, which is vital for troubleshooting and maintaining a smooth workflow.
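
One way to picture the error handling and logging described here is a small wrapper around each step. The sketch below is only an illustration: the `run_step` name, the ten-minute default timeout, and the choice to log the last five lines of stderr are assumptions, not requirements of any CI system.

```python
import logging
import subprocess
import sys

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_step(name, argv, timeout=600):
    """Run one step, log what happened, and return True on success."""
    log.info("step %s: starting %s", name, argv)
    try:
        result = subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
    except FileNotFoundError:
        # The tool is not installed in this environment.
        log.error("step %s: command not found: %s", name, argv[0])
        return False
    except subprocess.TimeoutExpired:
        log.error("step %s: timed out after %ss", name, timeout)
        return False
    if result.returncode != 0:
        # Surface the tail of stderr so the failure can be diagnosed from the log.
        tail = result.stderr.strip().splitlines()[-5:]
        log.error("step %s: exit code %d\n%s", name, result.returncode, "\n".join(tail))
        return False
    log.info("step %s: ok", name)
    return True
```

Returning a boolean rather than raising lets the caller decide whether a failed step should stop the pipeline or simply be reported.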

The Imperative vs. Declarative Debate

In the evolving landscape of CI/CD, a significant shift is occurring from declarative formats towards imperative programming. This shift addresses some inherent limitations in declarative approaches and opens new possibilities for managing CI/CD tasks.

Understanding Declarative Formats

Declarative formats such as YAML or JSON, commonly used by CI/CD and configuration tools, are excellent for defining the desired state of a system. They are straightforward, human-readable, and allow developers to specify 'what' they want without detailing 'how' to achieve it. This simplicity is particularly beneficial in configuration management and defining infrastructure as code.

However, declarative formats have limitations, particularly when it comes to detailed operational tasks. They often struggle with:

Complex Conditional Logic: Implementing complex conditional flows and decision-making processes can be cumbersome and less intuitive in declarative formats.

Dynamic Behaviour: Adapting to changing conditions or requirements dynamically is often challenging with declarative specifications.

Visibility and Debugging: Understanding the flow of execution and debugging can be harder, as the declarative syntax abstracts the underlying operations.

Advantages of Imperative Programming

Imperative programming, using languages like Go, Python, or JavaScript, offers a more granular level of control over CI/CD processes. It enables developers to specify not only what they want to achieve but also how to achieve it. This approach is advantageous for several reasons:

Detailed Control Flow: Imperative languages excel at expressing complex logic, conditions, and loops. This allows developers to precisely control the flow of operations in their CI/CD pipelines.

Dynamic Adaptation: With imperative programming, it's easier to implement dynamic behaviour based on runtime data, user inputs, or external events.

Error Handling: More sophisticated error handling can be implemented, allowing pipelines to respond intelligently to unexpected situations.

Extensibility: Imperative scripts can be easily extended or modified to incorporate new requirements or changes in the development process.
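
To illustrate the control-flow point, here is a small sketch in which the steps to run are computed from runtime data about a change. The `Change` type, the step names, and the path conventions (`docs/`, `migrations/`, a `main` branch that deploys) are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Change:
    branch: str
    files: List[str]


def plan_steps(change):
    """Decide which steps to run from runtime data about the change."""
    docs_only = bool(change.files) and all(
        f.startswith("docs/") or f.endswith(".md") for f in change.files
    )
    if docs_only:
        # Documentation-only changes skip the build entirely.
        return ["lint-docs"]
    steps = ["build", "unit-test"]
    if any(f.startswith("migrations/") for f in change.files):
        steps.append("migration-check")
    if change.branch == "main":
        steps.append("deploy")
    return steps
```

Because `plan_steps` is an ordinary function, the decision logic can be unit tested without running a pipeline, which is harder to do when the same conditions are spread across declarative configuration.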

Modern CI/CD pipelines operate in complex environments where the availability of specific tools can vary greatly. This variability poses a significant challenge, as pipelines must be adaptable to different environments without assuming the presence of certain tools. Addressing this challenge involves two key strategies: leveraging containerization and adopting versatile tools like Dagger.

Embracing Containerization

Containerization has emerged as a pivotal solution to the tooling challenges in CI/CD pipelines. By encapsulating tasks in containers, pipelines can execute these tasks without relying on the presence of specific tools in the underlying environment. This approach offers several benefits:

Consistency Across Environments: Containers package not just the application, but also its dependencies, ensuring that it runs consistently across different environments.

Portability: Containers can be moved easily across environments - from a developer's local machine to production servers - without needing any changes.

Isolation and Security: Containers provide an isolated environment for each task, enhancing security and reducing the risk of conflicts between different tasks or processes.

Scalability and Resource Efficiency: Containers can be quickly spun up and down, making it easier to scale applications and efficiently use resources.
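
As a rough sketch of what encapsulating a task in a container can look like, the helper below builds a `docker run` command that mounts the current checkout into a container and runs the task there, so the host only needs a container engine. The function name, the `/src` working directory, and the example image are assumptions for illustration.

```python
import os


def container_command(image, task_argv, workdir="/src", source_dir=None, engine="docker"):
    """Build the argv that runs task_argv inside a throwaway container."""
    source_dir = os.path.abspath(source_dir or os.getcwd())
    return [
        engine, "run", "--rm",            # remove the container when the task ends
        "-v", f"{source_dir}:{workdir}",  # bind-mount the checkout into the container
        "-w", workdir,                    # run the task from the mounted checkout
        image,
        *task_argv,
    ]
```

For example, `container_command("golang:1.22", ["go", "test", "./..."])` yields a command that runs the Go test suite without Go being installed on the host; the resulting list can be passed to `subprocess.run`.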

Introducing Dagger: A Hybrid Solution

In this context, Dagger emerges as a particularly effective tool for modern CI/CD pipelines. Dagger stands out for its ability to adapt to both local and remote environments, a crucial feature in today's diverse development landscapes. Its key features include:

Support for Local and Remote Execution: Dagger facilitates the execution of CI/CD tasks both locally and on remote servers, offering a consistent experience for developers and operators.

Programming Language Flexibility: Dagger supports task definitions in various programming languages, providing flexibility and allowing teams to use languages they are already familiar with.

Containerization Capabilities: By leveraging containerization, Dagger minimises the dependency on pre-installed tools, making it easier to set up and manage pipelines in different environments.

Ease of Integration: Dagger can be integrated with existing CI/CD workflows and tools, allowing teams to adopt it without overhauling their current systems.

Expanding the Role of Dagger in CI/CD

Dagger's role in CI/CD can be expanded to address specific pipeline challenges:

Custom Task Definition: Teams can define custom tasks in Dagger using their preferred programming language, which is particularly useful for complex or unique pipeline requirements.

Pipeline as Code: With Dagger, pipelines can be defined as code, making them more maintainable, versionable, and testable.

Dagger Approach

Dagger, in the realm of CI/CD, stands out for its ability to execute a wide array of tasks efficiently and seamlessly. This tool brings a new level of versatility and adaptability to modern CI/CD practices, addressing common challenges and streamlining workflows.

Intelligent Caching Mechanism

One of Dagger's standout features is its intelligent caching system. This system:

Speeds Up Builds: By caching previously built components, Dagger can significantly reduce build times, especially for large projects with numerous dependencies.

Reduces Redundancy: Dagger intelligently determines which parts of the build process can be reused, avoiding unnecessary repetitions and saving resources.

Enhances Efficiency: This caching mechanism makes the overall CI/CD process more efficient, as it streamlines the build and deployment phases.
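
The general idea behind this kind of caching can be sketched by keying each step's result on a hash of its command and input contents, so that unchanged work is not repeated. The code below is a simplified, in-memory illustration of that principle under assumed names (`StepCache`, `execute`); it is not a description of how Dagger implements its cache.

```python
import hashlib
import json


class StepCache:
    """In-memory cache keyed by a hash of a step's command and input contents."""

    def __init__(self):
        self._results = {}
        self.hits = 0

    @staticmethod
    def key(command, inputs):
        # inputs maps file names to bytes; sorting makes the key order-independent.
        digest = hashlib.sha256(json.dumps(command).encode())
        for name in sorted(inputs):
            digest.update(name.encode())
            digest.update(hashlib.sha256(inputs[name]).digest())
        return digest.hexdigest()

    def run(self, command, inputs, execute):
        """Return the cached result, or call execute() and remember its result."""
        k = self.key(command, inputs)
        if k in self._results:
            self.hits += 1
            return self._results[k]
        result = execute()
        self._results[k] = result
        return result
```

Running the same command against identical inputs returns the stored result without calling `execute` again, while any change to the command or to a file's contents produces a new key and triggers a fresh run.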

Seamless Integration with Existing Workflows

Dagger's design allows for easy integration with existing CI/CD systems such as GitHub Actions, Jenkins, and GitLab CI:

CI/CD Tools Compatibility: Dagger can be incorporated into existing CI/CD tools like GitHub Actions, allowing developers to use both tools in tandem for more comprehensive CI/CD pipelines.

Minimising Transition Effort: The ease of integration means that adopting Dagger does not require a complete overhaul of existing CI/CD practices.

Environment Agnostic: Dagger's containerized approach means it can run tasks in any environment that supports containers, from local developer machines to various cloud platforms.

Consistent Execution: Regardless of where the pipeline is running, containerization ensures that tasks execute in a consistent environment, reducing the "works on my machine" problem.

Isolation and Security: Tasks run in isolated containers, enhancing security and minimising the risk of conflicts or contamination between different parts of the pipeline.

Looking Ahead

As the industry continues to evolve, the focus should be on enhancing the adaptability and efficiency of CI/CD pipelines. This includes:

Incorporating Local and Remote Execution Seamlessly: Achieving a balance where CI/CD tasks are executed effectively in both local and remote environments.

Embracing Programming Languages for Task Definition: Utilising the power of imperative programming to articulate complex CI/CD tasks, thus offering greater control and flexibility than traditional declarative approaches.

Standardising Containerization: Adopting containerization as a norm ensures that CI/CD pipelines are independent of specific environments, reducing reliance on particular tools and setups.

Continuous Learning and Adaptation: Keeping abreast of emerging tools and practices in the CI/CD field to continually refine and optimise workflows.

Final Thoughts

At CECG, our vision is centred on transforming software development by focusing on enhancing development and operational efficiency through Continuous Integration/Continuous Deployment (CI/CD). We see CI/CD not just as a process, but as a practical tool for driving significant improvements in key metrics such as development speed, reliability, and mean time to deliver or fix issues.

Our approach is grounded in the belief that real progress is achieved by consistently improving these tangible aspects of software engineering. This commitment positions us at the forefront of turning everyday challenges into opportunities for measurable growth and advancement in the field.

We recognise that the landscape of software development is ever-evolving. In response, our strategies are not static but dynamic, adapting to the changing needs of the industry and our clients. This adaptability is not an afterthought; it is a key component of our DNA. We strive to anticipate the future, ensuring that our CI/CD practices are not just relevant today but are paving the way for the developments of tomorrow.

At CECG, efficiency and agility are more than goals; they are the pillars upon which we build our success. Our CI/CD pipelines are engineered to be robust yet flexible, ensuring that we can move quickly without compromising on quality or performance.

This article is provided as a general guide for general information purposes only. It does not constitute advice. CECG disclaims liability for actions taken based on the materials.